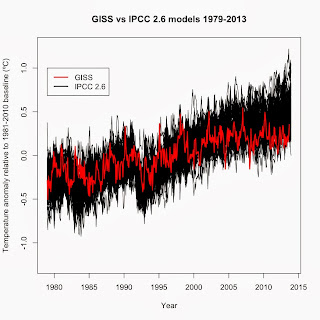

One of the dominant memes among climate deniers is that climate models are inaccurate. While this is true, particularly since 1998 (see Fyfe et al. 2013), it doesn't mean that global warming isn't happening, or that global warming is due to a natural cycle rather than CO₂, as many deniers claim. For those leaps of logic to hold, the entire field of radiative physics, 152 years of experiments, and 40+ years of satellite observations would all have to be wrong. Nor does it mean that the climate is any less sensitive to changes in radiative forcing than multiple studies have shown it to be (e.g., Paleosens 2013). What it means is far more complex.

To illustrate this complexity, I compared IPCC AR5 climate models with observed surface temperatures (GISS). The AR5 models were run under four scenarios, labeled RCP 2.6, RCP 4.5, RCP 6.0, and RCP 8.5. Data for each scenario, along with global temperature data and climate indices such as the AMO and PDO, are available at Climate Explorer. The RCP scenarios span the rang